```
# qdel -p jobID
```


Linux Toolkits Blog is a scratch-pad of tips and findings on Linux


```
# qstat --version
Version: 4.2.7
Commit: xxxxxxxxxxxxxxxxxxxxxx
```

```
# ls -ld /home
drwx------ 7 root root 8192 Dec 22 15:13 home
```

```
# chmod 755 /home
# ls -ld /home
drwxr-xr-x 7 root root 8192 Dec 22 15:13 home
```

```
# engine-log-collector
INFO: Gathering oVirt Engine information...
INFO: Gathering PostgreSQL the oVirt Engine database and log files from localhost...
Please provide the REST API password for the admin@internal oVirt Engine user (CTRL+D to skip):
About to collect information from 1 hypervisors. Continue? (Y/n): y
INFO: Gathering information from selected hypervisors...
INFO: collecting information from 192.168.50.56
INFO: finished collecting information from 192.168.50.56
Creating compressed archive...
```

```
# engine-log-collector --hosts=*.11,*.15
```

```
# engine-log-collector --no-hypervisors
```

```
# vim /etc/ssh/sshd_config
```

```
.....
ClientAliveInterval 60
.....
```

```
# vim ~/.ssh/config
```

```
.....
ServerAliveInterval 60
.....
```
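Both keepalive settings can also be applied from a script. The sketch below uses a hypothetical helper, `set_ssh_option`, which is not part of OpenSSH. It assumes GNU grep and sed: an existing or commented-out line for the keyword is replaced, and otherwise the line is appended. In `~/.ssh/config` an appended line belongs to the last `Host` block, so check where it lands.

```bash
#!/usr/bin/env bash
# set_ssh_option FILE KEY VALUE  (hypothetical helper)
# Idempotently set "KEY VALUE" in an OpenSSH-style config file.
# Replaces any existing or commented-out KEY line (keywords are matched
# case-insensitively, as sshd does), otherwise appends the line.
# Assumes GNU sed; KEY and VALUE must not contain '/'.
set_ssh_option() {
  local file=$1 key=$2 value=$3
  if grep -E -q -i "^[#[:space:]]*${key}[[:space:]]" "$file"; then
    sed -E -i "s/^[#[:space:]]*${key}[[:space:]].*/${key} ${value}/I" "$file"
  else
    printf '%s %s\n' "$key" "$value" >> "$file"
  fi
}
```

For example, `set_ssh_option /etc/ssh/sshd_config ClientAliveInterval 60` followed by `service sshd restart`.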

```
# chown username.usergroups myfile
```

```
# chown user.name:usergroups myfile
```
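To try the colon form without root, you can chown a scratch file to yourself using your current user and primary group. The helper name `chown_to_self` is only for illustration:

```bash
#!/usr/bin/env bash
# chown_to_self FILE  (hypothetical helper)
# Uses the "user:group" form of chown with the current user and primary
# group, so it works without root. The colon separator is what keeps
# user names that contain a dot (user.name) unambiguous.
chown_to_self() {
  local file=$1
  chown "$(id -un):$(id -gn)" "$file"
}
```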

```
[epel]
.....
.....
mirrorlist=http://mirrors.fedoraproject.org/metalink?repo=epel-6&arch=$basearch

[epel-debuginfo]
.....
.....
mirrorlist=http://mirrors.fedoraproject.org/metalink?repo=epel-debug-6&arch=$basearch
```

```
# tar -zxvf udunits-2.1.24.tar.gz
# cd udunits-2.1.24
# ./configure --prefix=/usr/local/udunits-2.1.24 CC=gcc CXX=g++
# make
# make install
```

```
# tar -zxvf antlr-2.7.7.tar.gz
# cd antlr-2.7.7
```

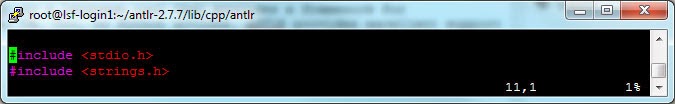

```
# vim /root/antlr-2.7.7/lib/cpp/antlr/CharScanner.hpp
```

```
# ./configure --prefix=/usr/local/antlr2.7.7 --disable-examples
# make -j 8
# make install
```

```
# cd /etc/yum.repos.d/
# wget -O /etc/yum.repos.d/slc6-devtoolset.repo http://linuxsoft.cern.ch/cern/devtoolset/slc6-devtoolset.repo
# yum install devtoolset-2 --nogpgcheck
# scl enable devtoolset-2 bash
```

```
# cd /etc/yum.repos.d/
# wget -O /etc/yum.repos.d/slc5-devtoolset.repo http://linuxsoft.cern.ch/cern/devtoolset/slc5-devtoolset.repo
# yum install devtoolset-1.1
# scl enable devtoolset-1.1 bash
```

```
connecting to sesman ip 127.0.0.1 port 3350
sesman connect ok
sending login info to session manager, please wait...
xrdp_mm_process_login_reponse: login successful for display
started connecting
connecting to 127.0.0.1 5910
error - problem connecting
```

```
......
[20141118-23:53:40] [ERROR] X server for display 10 startup timeout
[20141118-23:53:40] [INFO ] starting xrdp-sessvc - xpid=2998 - wmpid=2997
[20141118-23:53:40] [ERROR] X server for display 10 startup timeout
[20141118-23:53:40] [ERROR] another Xserver is already active on display 10
[20141118-23:53:40] [DEBUG] aborting connection...
[20141118-23:53:40] [INFO ] ++ terminated session: username root, display :10.0
.....
```

```
# yum install tigervnc-server
```

```
# service xrdp restart
```

Batch host STATUS values reported by `bhosts`:

| STATUS | DESCRIPTION |
|---|---|
| ok | Host is available to accept and run new batch jobs. |
| unavail | Host is down, or LIM and sbatchd are unreachable. |
| unreach | LIM is running but sbatchd is unreachable. |
| closed | Host will not accept new jobs. Use `bhosts -l` to display the reasons. |
| unlicensed | Host does not have a valid license. |

```
$ bhosts -l
HOST  node001
STATUS       CPUF   JL/U    MAX  NJOBS    RUN  SSUSP  USUSP    RSV  DISPATCH_WINDOW
closed_Adm  60.00      -     16      0      0      0      0      0  -

 CURRENT LOAD USED FOR SCHEDULING:
               r15s     r1m    r15m      ut      pg      io      ls      it     tmp     swp     mem    root maxroot
 Total          0.0     0.0     0.0      0%     0.0       0       0   28656    324G     16G     60G   3e+05   4e+05
 Reserved       0.0     0.0     0.0      0%     0.0       0       0       0      0M      0M      0M     0.0     0.0

            processes clockskew netcard iptotal  cpuhz cachesize diskvolume
 Total          404.0       0.0     2.0     2.0 1200.0     2e+04      5e+05
 Reserved         0.0       0.0     0.0     0.0    0.0       0.0        0.0

            processesroot   ipmi powerconsumption ambienttemp cputemp
 Total              396.0   -1.0             -1.0        -1.0    -1.0
 Reserved             0.0    0.0              0.0         0.0     0.0

              aa_r aa_r_dy aa_dy_p aa_r_ad aa_r_hpc fluentall fluent fluent_nox
 Total        17.0    25.0   128.0    10.0    272.0      48.0   48.0       50.0
 Reserved      0.0     0.0     0.0     0.0      0.0       0.0    0.0        0.0

            gambit geom_trans  tgrid fluent_par
 Total        50.0       50.0   50.0      193.0
 Reserved      0.0        0.0    0.0        0.0
```

```
$ bhosts -X
HOST_NAME          STATUS       JL/U    MAX  NJOBS    RUN  SSUSP  USUSP    RSV
comp027            ok              -     16      0      0      0      0      0
comp028            ok              -     16      0      0      0      0      0
comp029            ok              -     16      0      0      0      0      0
comp030            ok              -     16      0      0      0      0      0
comp031            ok              -     16      0      0      0      0      0
comp032            ok              -     16      0      0      0      0      0
comp033            ok              -     16      0      0      0      0      0
```
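To pick out the problem hosts from a long `bhosts -X` listing, a small awk filter is enough. This sketch assumes that the first two columns are HOST_NAME and STATUS, as in the output above. `bhosts_not_ok` is a made-up name:

```bash
#!/usr/bin/env bash
# bhosts_not_ok  (hypothetical helper)
# Read `bhosts` / `bhosts -X` output on stdin and print "HOST STATUS" for
# every host whose STATUS is not "ok" (e.g. closed_Adm, unavail, unreach).
# The first line is treated as the header and skipped, as are blank lines.
bhosts_not_ok() {
  awk 'NR > 1 && NF && $2 != "ok" { print $1, $2 }'
}
```

Usage: `bhosts -X | bhosts_not_ok`.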

```
# bhosts -l comp067
HOST  comp067
STATUS       CPUF   JL/U    MAX  NJOBS    RUN  SSUSP  USUSP    RSV  DISPATCH_WINDOW
ok          60.00      -     16      0      0      0      0      0  -

 CURRENT LOAD USED FOR SCHEDULING:
               r15s     r1m    r15m      ut      pg      io      ls      it     tmp     swp     mem    root maxroot
 Total          0.0     0.0     0.0      0%     0.0       0       0   13032    324G     16G     60G   3e+05   4e+05
 Reserved       0.0     0.0     0.0      0%     0.0       0       0       0      0M      0M      0M     0.0     0.0

            processes clockskew netcard iptotal  cpuhz cachesize diskvolume
 Total          406.0       0.0     2.0     2.0 1200.0     2e+04      5e+05
 Reserved         0.0       0.0     0.0     0.0    0.0       0.0        0.0

            processesroot   ipmi powerconsumption ambienttemp cputemp
 Total              399.0   -1.0             -1.0        -1.0    -1.0
 Reserved             0.0    0.0              0.0         0.0     0.0

              aa_r aa_r_dy aa_dy_p aa_r_ad aa_r_hpc fluentall fluent fluent_nox
 Total        18.0    25.0   128.0    10.0    272.0      47.0   47.0       50.0
 Reserved      0.0     0.0     0.0     0.0      0.0       0.0    0.0        0.0

            gambit geom_trans  tgrid fluent_par
 Total        50.0       50.0   50.0      193.0
 Reserved      0.0        0.0    0.0        0.0

 LOAD THRESHOLD USED FOR SCHEDULING:
               r15s     r1m    r15m      ut      pg      io      ls      it     tmp     swp     mem
 loadSched        -       -       -       -       -       -       -       -       -       -       -
 loadStop         -       -       -       -       -       -       -       -       -       -       -

               root maxroot processes clockskew netcard iptotal  cpuhz cachesize
 loadSched        -       -         -         -       -       -      -         -
 loadStop         -       -         -         -       -       -      -         -

            diskvolume processesroot   ipmi powerconsumption ambienttemp cputemp
 loadSched           -             -      -                -           -       -
 loadStop            -             -      -                -           -       -
```

LIM host STATUS values (as shown, for example, by `lsload`):

| STATUS | DESCRIPTION |
|---|---|
| ok | Host is available to accept and run batch jobs and remote tasks. |
| -ok | LIM is running but RES is unreachable. |
| busy | Does not affect batch jobs; only used for remote task placement (i.e., `lsrun`). The value of a load index exceeded a threshold (configured in `lsf.cluster.cluster_name`, displayed by `lshosts -l`). Indices that exceed thresholds are identified with an asterisk (`*`). |
| lockW | Does not affect batch jobs; only used for remote task placement (i.e., `lsrun`). Host is locked by a run window (configured in `lsf.cluster.cluster_name`, displayed by `lshosts -l`). |
| lockU | Will not accept new batch jobs or remote tasks. An LSF administrator or root explicitly locked the host using `lsadmin limlock`, or an exclusive batch job (`bsub -x`) is running on the host. Running jobs are not affected. Use `lsadmin limunlock` to unlock LIM on the local host. |
| unavail | Host is down, or LIM is unavailable. |
| unlicensed | The host does not have a valid license. |

```
# pkill -u user
```

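```
$ kill -9 -1
```

Run as the user (never as root), `kill -9 -1` sends SIGKILL to every process that user is allowed to signal.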

```
# vim /etc/httpd/conf.d/ssl.conf
```

```
SSLProtocol all -SSLv3 -SSLv2
```

```
# service httpd restart
```
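The same edit can be scripted. This sketch uses a hypothetical helper, `disable_sslv3`, and assumes GNU sed. It rewrites every uncommented `SSLProtocol` line, keeps the indentation, and saves a `.bak` copy. It does not add the directive if none exists.

```bash
#!/usr/bin/env bash
# disable_sslv3 FILE  (hypothetical helper)
# Rewrite every uncommented SSLProtocol directive in an Apache mod_ssl
# config file to "SSLProtocol all -SSLv3 -SSLv2", preserving leading
# whitespace. The original is kept as FILE.bak. Assumes GNU sed.
disable_sslv3() {
  local file=$1
  sed -i.bak -E \
    's/^([[:space:]]*)SSLProtocol[[:space:]].*/\1SSLProtocol all -SSLv3 -SSLv2/' \
    "$file"
}
```

Usage: `disable_sslv3 /etc/httpd/conf.d/ssl.conf && apachectl configtest && service httpd restart`.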

```
# ls /var/crash/127.0.0.1-2012-11-21-09\:49\:25/
vmcore  vmcore-dmesg.txt
# cp /var/crash/127.0.0.1-2012-11-21-09\:49\:25/vmcore-dmesg.txt /tmp/00123456-vmcore-dmesg.txt
```
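When there are several dumps under `/var/crash`, you can pick the newest one automatically. This sketch uses a made-up helper name. It assumes the kdump layout shown above, with one sub-directory per crash containing `vmcore-dmesg.txt`, and uses the directory modification time to find the latest dump:

```bash
#!/usr/bin/env bash
# copy_latest_dmesg CRASH_DIR CASE_NUMBER [DEST_DIR]  (hypothetical helper)
# Copy vmcore-dmesg.txt from the most recently modified dump directory
# under CRASH_DIR to DEST_DIR/<CASE_NUMBER>-vmcore-dmesg.txt (DEST_DIR
# defaults to /tmp). Returns 1 if no such file is found.
copy_latest_dmesg() {
  local crash_dir=$1 case_no=$2 dest=${3:-/tmp}
  local latest
  # ls -td lists directories newest first; the trailing / is kept.
  # Names may contain ':' (as in the example above) but not newlines.
  latest=$(ls -td "$crash_dir"/*/ 2>/dev/null | head -n 1)
  if [ -z "$latest" ] || [ ! -f "${latest}vmcore-dmesg.txt" ]; then
    echo "no vmcore-dmesg.txt found under $crash_dir" >&2
    return 1
  fi
  cp "${latest}vmcore-dmesg.txt" "$dest/${case_no}-vmcore-dmesg.txt"
}
```

Usage: `copy_latest_dmesg /var/crash 00123456`.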

```
# yum install haproxy
```

```
#---------------------------------------------------------------------
# Global settings
#---------------------------------------------------------------------
global
    # to have these messages end up in /var/log/haproxy.log you will
    # need to:
    #
    # 1) configure syslog to accept network log events.  This is done
    #    by adding the '-r' option to the SYSLOGD_OPTIONS in
    #    /etc/sysconfig/syslog
    #
    # 2) configure local2 events to go to the /var/log/haproxy.log
    #   file. A line like the following can be added to
    #   /etc/sysconfig/syslog
    #
    #    local2.*                       /var/log/haproxy.log
    #
    log         127.0.0.1 local2

    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4500
    user        haproxy
    group       haproxy
    daemon

    stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    timeout queue   1m
    timeout connect 60m
    timeout client  60m
    timeout server  60m

# -------------------------------------------------------------------
# [RDP Site Configuration]
# -------------------------------------------------------------------
listen cattail 155.69.57.11:3389
    mode tcp
    tcp-request inspect-delay 5s
    tcp-request content accept if RDP_COOKIE
    persist rdp-cookie
    balance leastconn
    option tcpka
    option tcplog
    server win2k8-1 192.168.6.48:3389 weight 1 check inter 2000 rise 2 fall 3
    server win2k8-2 192.168.6.47:3389 weight 1 check inter 2000 rise 2 fall 3
    option redispatch

listen stats :1936
    mode http
    stats enable
    stats hide-version
    stats realm Haproxy\ Statistics
    stats uri /
```

```
Unable to open socket connection to xcatd daemon on localhost:3001.
Verify that the xcatd daemon is running and that your SSL setup is correct.
```

```
127.0.0.1   localhost.localdomain localhost
```
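You can quickly check whether the hosts file actually contains that localhost entry. This sketch assumes, as the hosts line above suggests, that the xcatd error is caused by `localhost` not resolving. `hosts_has_localhost` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# hosts_has_localhost [HOSTS_FILE]  (hypothetical helper)
# Exit 0 if HOSTS_FILE (default /etc/hosts) has an uncommented 127.0.0.1
# line listing the name "localhost", exit 1 otherwise.
hosts_has_localhost() {
  local file=${1:-/etc/hosts}
  awk '$1 == "127.0.0.1" { for (i = 2; i <= NF; i++) if ($i == "localhost") found = 1 }
       END { exit !found }' "$file"
}
```

Usage: `hosts_has_localhost || echo "add a 127.0.0.1 localhost line to /etc/hosts"`.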

```
$ ssh -X somehost.com
```

```
$ xauth list
```

```
.....
current-local-server:17  MIT-MAGIC-COOKIE-1  395f7b22fb6087a29b5fb1c9e37577c0
.....
```

```
$ xauth list | cut -f1 -d' ' | xargs -I{} xauth remove {}
```
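The pipeline above removes every entry at once, so it can be worth previewing it first. This sketch uses a hypothetical helper, `xauth_remove_cmds`, that only prints the `xauth remove` commands. It assumes that the display name is the first field of each `xauth list` line, as in the sample above:

```bash
#!/usr/bin/env bash
# xauth_remove_cmds  (hypothetical helper)
# Read `xauth list` output on stdin and print one "xauth remove DISPLAY"
# command per entry without running it. Blank lines are ignored.
xauth_remove_cmds() {
  # The first whitespace-separated field of each entry is the display name.
  awk 'NF { print "xauth remove " $1 }'
}
```

Preview with `xauth list | xauth_remove_cmds`, then apply with `xauth list | xauth_remove_cmds | sh`.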

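```
$ env x='() { :;}; echo vulnerable' bash -c "echo this is a test"
```

If the output of the above command looks like the following, the installed bash is vulnerable to Shellshock (CVE-2014-6271) and should be updated:

```
vulnerable
this is a test
```

After updating bash, the same test no longer runs the injected `echo vulnerable`:

```
$ env x='() { :;}; echo vulnerable' bash -c "echo this is a test"
bash: warning: x: ignoring function definition attempt
bash: error importing function definition for `x'
this is a test
```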
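You can wrap the test in a function that prints a one-line verdict, which is handy when checking many nodes over ssh. This is a sketch and `check_shellshock` is a made-up name. It only looks for the `vulnerable` line on stdout and ignores the warnings that patched shells print on stderr:

```bash
#!/usr/bin/env bash
# check_shellshock [SHELL]  (hypothetical helper)
# Run the CVE-2014-6271 test against SHELL (default: bash on PATH) and
# print "vulnerable" or "not vulnerable".
check_shellshock() {
  local shell=${1:-bash}
  local out
  # Patched shells print warnings on stderr; only stdout is inspected.
  out=$(env x='() { :;}; echo vulnerable' "$shell" -c 'echo this is a test' 2>/dev/null)
  if grep -qx 'vulnerable' <<<"$out"; then
    echo "vulnerable"
  else
    echo "not vulnerable"
  fi
}
```

Usage: `check_shellshock /bin/bash`.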

```
Starting haproxy: [WARNING] 265/233231 (20638) : config : log format ignored for proxy 'load-balancer-node' since it has no log address.
[ALERT] 265/233231 (20638) : Starting proxy load-balancer-node: cannot bind socket
```

```
# netstat -anop | grep ":3389"
tcp        0      0 0.0.0.0:3389                0.0.0.0:*                   LISTEN      20606/xrdp          off (0.00/0/0)
```

```
# service xrdp stop
```

```
# service haproxy start
```
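Before starting haproxy, you can find the process holding the port directly from the `netstat -anop` output. This sketch assumes the column order shown in the output above: local address in column 4, state in column 6, and PID/program in column 7. `port_owner` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# port_owner PORT  (hypothetical helper)
# Read `netstat -anop` output on stdin and print the PID/program column of
# every socket in LISTEN state whose local address ends in ":PORT".
port_owner() {
  local port=$1
  awk -v p=":$port" '
    $6 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p { print $7 }'
}
```

Usage: `netstat -anop | port_owner 3389`. For the output above this prints `20606/xrdp`.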

```
Sep 17 12:00:00 node1 sshd[4725]: error: PAM: Authentication failure for user2 from 192.168.1.5
Sep 17 12:00:01 node1 adclient[7052]: WARN  audit User 'user2' not authenticated: while getting service credentials: No credentials found with supported encryption
```

```
# service centrifydc restart
# service centrify-sshd restart
```

```
# wget http://download.dokuwiki.org/src/dokuwiki/dokuwiki-stable.tgz
# tar -xzvf dokuwiki-stable.tgz
```

Step 2: Move dokuwiki files to apache directory

```
# mv dokuwiki-stable /var/www/html/dokuwiki
```

Step 3: Set ownership and permissions for dokuwiki

```
# chown -R apache:root /var/www/html/dokuwiki
# chmod -R 664 /var/www/html/dokuwiki/
# find /var/www/html/dokuwiki/ -type d -exec chmod 775 {} \;
```
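You can also set the modes with two find passes, one for directories and one for regular files, so that directories never lose their execute bit along the way. This sketch uses a hypothetical helper name. It only sets modes, so you still need the `chown -R apache:root` step (as root):

```bash
#!/usr/bin/env bash
# fix_dokuwiki_perms DIR  (hypothetical helper)
# Directories under DIR (including DIR) get 775, regular files get 664.
fix_dokuwiki_perms() {
  local dir=$1
  # Directories first, one chmod per directory as find reaches it, so the
  # traversal can still descend into them even when run as non-root.
  find "$dir" -type d -exec chmod 775 {} \;
  # Then all regular files, batched into as few chmod calls as possible.
  find "$dir" -type f -exec chmod 664 {} +
}
```

Usage: `fix_dokuwiki_perms /var/www/html/dokuwiki`. The same call also covers the repeated permission commands in Step 9.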

Step 4: Continue the installation at http://192.168.1.1/dokuwiki/install.php

Ignore the security warning; the data directory can only be moved after the installation is complete.

Fill out the form and click Save.

Step 5: Delete install.php for security

```
# rm /var/www/html/dokuwiki/install.php
```

Step 6: Move the data, bin (CLI) and conf directories out of the Apache directories for security. This assumes Apache does not serve /var/www itself, only /var/www/html and /var/cgi-bin; otherwise, use a different directory:

```
# mkdir /var/www/dokudata
# mv /var/www/html/dokuwiki/data/ /var/www/dokudata/
# mv /var/www/html/dokuwiki/conf/ /var/www/dokudata/
# mv /var/www/html/dokuwiki/bin/ /var/www/dokudata/
```

Step 7: Tell dokuwiki where the conf directory is

```
# vim /var/www/html/dokuwiki/inc/preload.php
```

```php
<?php
// DO NOT use a closing php tag. This causes a problem with the feeds,
// among other things. For more information on this issue, please see:
// http://www.dokuwiki.org/devel:coding_style#php_closing_tags
define('DOKU_CONF','/var/www/dokudata/conf/');
```

Note the comment explaining why there is no closing PHP tag.
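If you prefer not to edit the file interactively, you can generate `preload.php` with a here-document. This is a sketch and `write_preload` is a hypothetical helper. The quoted `'EOF'` delimiter stops the shell from expanding anything in the PHP text, and the generated file has no closing tag:

```bash
#!/usr/bin/env bash
# write_preload FILE CONF_DIR  (hypothetical helper)
# Write a DokuWiki inc/preload.php that points DOKU_CONF at CONF_DIR.
write_preload() {
  local file=$1 conf_dir=$2
  {
    cat <<'EOF'
<?php
// DO NOT use a closing php tag. This causes a problem with the feeds,
// among other things. For more information on this issue, please see:
// http://www.dokuwiki.org/devel:coding_style#php_closing_tags
EOF
    # CONF_DIR is passed as a printf argument, so '%' in it is safe.
    printf "define('DOKU_CONF','%s');\n" "$conf_dir"
  } > "$file"
}
```

Usage: `write_preload /var/www/html/dokuwiki/inc/preload.php /var/www/dokudata/conf/`.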

Step 8: Tell dokuwiki where the data directory is

```
# vim /var/www/dokudata/conf/local.php
```

```php
$conf['savedir'] = '/var/www/dokudata/data/';
```

Step 9: Apply the same ownership and permissions again, this time also to the new /var/www/dokudata directory

```
# chown -R apache:root /var/www/html/dokuwiki
# chmod -R 664 /var/www/html/dokuwiki/
# find /var/www/html/dokuwiki/ -type d -exec chmod 775 {} \;
# chown -R apache:root /var/www/dokudata
# chmod -R 664 /var/www/dokudata/
# find /var/www/dokudata/ -type d -exec chmod 775 {} \;
```

Step 10: Go to the wiki at http://192.168.1.1/dokuwiki/